What is LiDAR?

LiDAR, which stands for Light Detection and Ranging, works by sending a laser pulse toward a target object and measuring how long it takes for the light to reflect back. Because light travels at a known, constant speed, this round-trip time tells you the distance to the object. For some of you, the concept might sound familiar from RADAR and SONAR, which map surrounding objects using radio waves and sound waves, respectively. But here is the twist: because laser light has a much shorter wavelength, LiDAR can build a detailed 3D model of the target object at a resolution that is hard to achieve with either of those technologies.
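To make the round-trip idea concrete, here is a minimal Python sketch of the time-of-flight calculation. It assumes the pulse travels at the speed of light in a vacuum (light in air is very slightly slower, which this ignores), and the function name `distance_from_round_trip` is hypothetical. The key step is halving the result, because the measured time covers the trip to the target and back.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance to a target from a pulse's round-trip time.

    The pulse travels to the target and back, so the distance to the target
    is half of the total path covered at the speed of light.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


if __name__ == "__main__":
    # A pulse that returns after 20 nanoseconds hit something about 3 metres away.
    print(f"{distance_from_round_trip(20e-9):.3f} m")
```

Running it prints `2.998 m`. The same arithmetic shows why LiDAR needs very precise timing: an error of just 1 nanosecond shifts the estimate by about 15 cm.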

Why Is the iPhone Using LiDAR?

The article I read, however, was about the LiDAR sensor on the iPhone. Wait! What? What is a distance-measuring sensor doing on a phone? You are probably wondering the same thing. LiDAR has a wide range of applications, and use in a phone is just one of them. The iPhone's LiDAR scanner is essentially a time-of-flight (ToF) sensor. While some other phones use a single laser beam to measure distance, the iPhone's LiDAR sends out an array of laser pulses and measures the distance to many points at once, mapping the target object in three dimensions. Promised improvements include better capture in low-light surroundings and enhanced Night mode portraits. More accurate depth sensing should also give a better AR experience, which is good news for gaming enthusiasts and online shoppers.
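The step from "one distance" to "a 3D map" can be sketched in a few lines of Python. This is a simplified illustration, not how Apple's scanner actually works: it assumes a hypothetical grid of beams fired at known horizontal (azimuth) and vertical (elevation) angles, converts each echo time into a distance, and then places each hit in 3D space using a hypothetical coordinate convention (x to the right, y up, z straight ahead). Real sensors also deal with noise, multiple echoes, and calibration, which this ignores.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458


def round_trip_to_distance(round_trip_ns):
    """One-way distance in metres for a round-trip time in nanoseconds."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_ns * 1e-9 / 2


def scan_to_points(round_trips_ns, azimuths_deg, elevations_deg):
    """Turn a grid of round-trip times into 3D points (x right, y up, z forward).

    round_trips_ns[row][col] is the echo time of the beam fired at
    elevations_deg[row] and azimuths_deg[col]; None means no echo came back.
    """
    points = []
    for row, elevation in enumerate(elevations_deg):
        for col, azimuth in enumerate(azimuths_deg):
            t = round_trips_ns[row][col]
            if t is None:
                continue  # nothing reflected within range for this beam
            d = round_trip_to_distance(t)
            el, az = math.radians(elevation), math.radians(azimuth)
            points.append((
                d * math.cos(el) * math.sin(az),  # x: to the right
                d * math.sin(el),                 # y: up
                d * math.cos(el) * math.cos(az),  # z: straight ahead
            ))
    return points


if __name__ == "__main__":
    # Hypothetical 2 x 3 grid of echo times (nanoseconds) from a tiny scanner.
    echoes = [
        [10.0, 10.0, None],
        [20.0, 13.3, 10.0],
    ]
    points = scan_to_points(echoes, azimuths_deg=[-10, 0, 10], elevations_deg=[5, -5])
    for x, y, z in points:
        print(f"x={x:+.2f} m  y={y:+.2f} m  z={z:+.2f} m")
```

Each beam contributes one point, so firing many beams at once is what turns a simple rangefinder into something that can sketch the shape of a room or an object.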

The iPhone Has LiDAR. What Do Android Phones Have in Store for Their Users?

The answer is ARCore, Google's platform for delivering AR experiences to its users. Google's AR journey started in 2014 with the Tango platform, which ran until 2018, when Google retired it in favor of something better. That is how ARCore came into the picture. ARCore relies on three capabilities to build a basic understanding of the real-world setting before superimposing virtual objects on it:

✔ Motion tracking
✔ Environmental understanding
✔ Light estimation

How Does ARCore Work on Android?

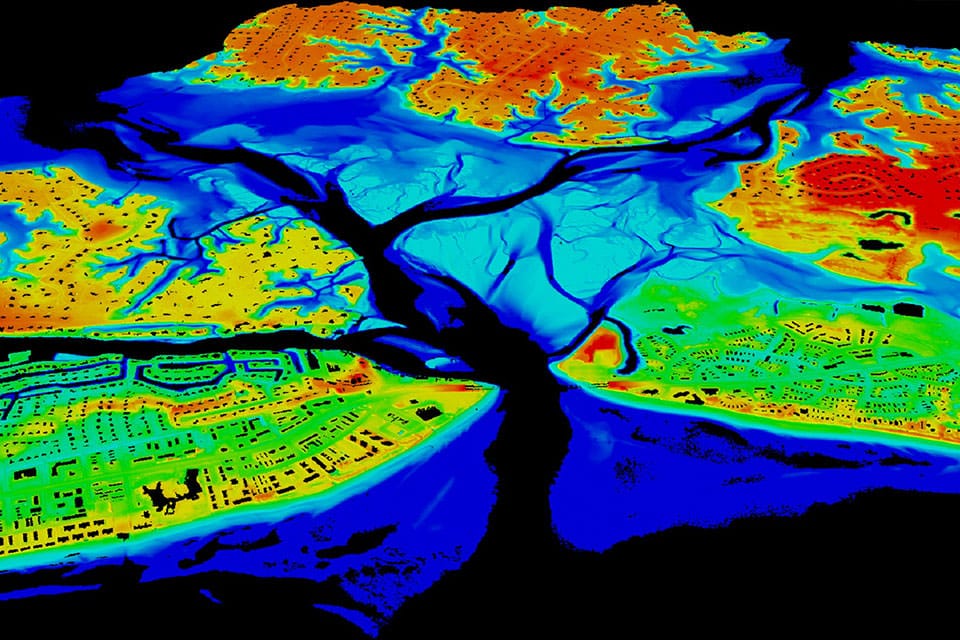

The prerequisite is that the phone must support ARCore; the technology requires Android 7.0 (Nougat) or later. First, for motion tracking, ARCore combines the phone's camera with the gyroscope, accelerometer, and other built-in sensors to work out the device's position. Next, for environmental understanding, it maps the topography of the room and its surroundings, which includes detecting flat surfaces such as a table, a board, or stairs. Finally, for light estimation, it takes into account the ambient light, or the light sources, in the scene. By combining all three, ARCore gives the user a seamless experience.

Google recently announced the Depth API to further enhance the AR experience. It is available starting with ARCore 1.18 for Android and Unity (including AR Foundation) on supported devices. The Depth API creates a depth map, much like LiDAR does: an image in which each pixel stores the distance from the camera to the corresponding point in the scene. Unlike LiDAR, however, the depth map is created using the device's regular RGB camera, by comparing multiple frames captured as the user moves the phone. This enables more realistic effects, such as virtual objects being correctly hidden behind real ones.

We have come a long way, from LiDAR on the iPhone to AR milestones on Android devices. I hope you found this article helpful. If you have any thoughts on "Looking for LiDAR on Android? Google has ARCore as the Answer", feel free to drop them in the comment box below. Also, please subscribe to our DigitBin YouTube channel for video tutorials. Cheers!